Here’s the rundown on what’s happening in social media as of February 10, 2026. The biggest stories span legal battles over platform design, AI‐powered features, global age restrictions, and platform innovations.

Landmark Child Addiction Trials Kick Off in California

A high-profile trial has begun in Los Angeles, where Meta (Instagram) and Google (YouTube) face accusations of deliberately addicting children through manipulative design, from “like” buttons to endless scrolling feeds. Attorneys likened the platforms’ tactics to casinos and drugs, citing internal documents such as Meta’s “Project Myst.” The case, centered on a young plaintiff identified as KGM, could reshape legal and regulatory norms around youth social media use.

Parallel litigation is underway in New Mexico over similar harms. These cases could test long-standing legal protections such as Section 230, and significant testimony from company executives, including Meta’s leadership, is expected.

TikTok Parent Unveils AI Video Generation Tool

ByteDance, TikTok’s parent company, introduced a new generative AI video creation feature. The tool pushes further toward AI-driven content and could change how creators produce videos and engage audiences.

Discord to Implement Age Verification Next Month

Discord is rolling out mandatory age verification. By default, users will be placed in a “teen‑appropriate experience” unless they can prove they’re adults. The change is aimed at tightening control over who can access age‑sensitive content.

Global Push: Age Restrictions Gain Momentum

- Spain announced plans to ban access to social media for users under 16, citing a need to shield youth from a “digital wild west.”

- Australia’s already-enacted law bars minors under 16 from holding social media accounts. It came into force in December 2025, about a year after it was passed, and applies across major platforms.

- In the U.S., South Carolina enacted an Age-Appropriate Design Code that requires platforms to report their age verification practices to the state attorney general.

This mounting regulatory pressure reflects a growing global push to protect minors online.

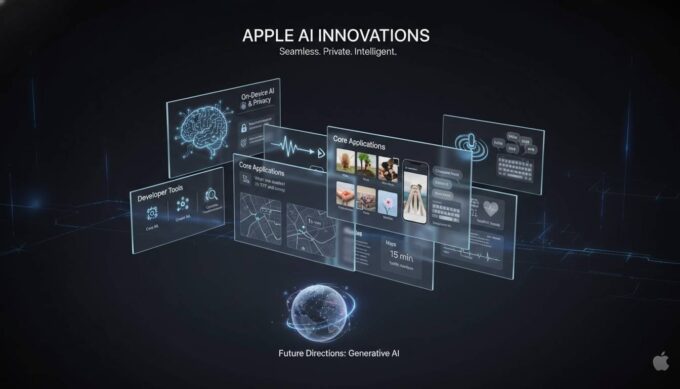

New AI‑Heavy Platforms: Moltbook and Moltbook-Style Networks

Some emerging platforms are built around AI agents rather than people. Moltbook is a bot-operated social network where AI agents post and interact with each other; humans can watch but can’t participate. It raises fresh questions about what counts as an online community.

Similarly, a platform hosting thousands of chatbots that interact only with each other has sparked a “robotic uprising” debate: is this the next frontier, or a content arms race?

Upscrolled Hits 2.5 Million Users

Upscrolled, a six-year-old challenger network, announced it has surpassed 2.5 million users. Growth has been rapid: it had just 150,000 users in January. This shows that even smaller platforms can gain unexpected traction.

Summary of Today’s Highlights

| Category | Key Developments |
|---|---|
| Legal / Regulation | California and New Mexico trials; Spain and South Carolina age restrictions. |
| Platform Features | ByteDance’s AI video tool; Discord age verification. |
| New Platforms | Moltbook and bot‑driven social interaction; Upscrolled’s growth. |

Thoughtful Insight

“We’re watching a crossroads in social media—where legal scrutiny, AI transformation, and global regulation are reshaping the landscape in real time.” — Industry analyst

What It All Means

- For users: Expect more age-based filters and AI-guided experiences.

- For platforms: Legal risk is growing—especially around youth addiction. New features must balance engagement with ethics.

- For businesses: Rising regulations demand compliance. AI tools and alternative platforms offer fresh opportunities, but also require caution.

FAQs

Are social media companies being sued over harm to children?

Yes. Litigation in California and New Mexico alleges that platforms like Instagram and YouTube engineered addiction in children. These cases may lead to tighter industry rules.

What new tools is TikTok’s parent company developing?

ByteDance just launched a generative AI video creation tool. It could change how content is made by automating more of the video production process.

Is Discord changing how minors access content?

Yes. Discord will place users in a restricted “teen‑appropriate” experience by default. Only users who verify they are adults will get full platform access.

Which countries and states are limiting kids’ access to social media?

Spain plans to ban under-16s from social media. Australia implemented a similar law in late 2025. In the U.S., South Carolina passed a law requiring platforms to report their age verification practices.

What’s Moltbook?

Moltbook is a novel social network where AI bots interact with each other while humans can only observe. It offers a glimpse of AI-only online spaces and has prompted debate about authenticity.

Who’s Upscrolled?

A niche social network that recently exploded past 2.5 million users, showing small platforms can gain momentum fast.

Concluding Summary

Today’s social media landscape is in flux—driven by legal scrutiny over youth harm, advancing AI features, new age controls, and inventive platforms. These shifts aren’t minor. They’re reshaping how people create, consume, and govern online spaces. As regulation tightens and tools evolve, the path forward will demand care, creativity, and resilience from every player in the digital sphere.
