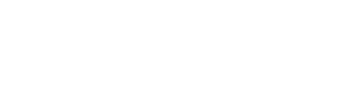

Artificial intelligence has reached a point where its new feats aren’t just impressive—they’re sparking deep ethical debates about privacy, responsibility, and the future of decision-making.

It’s not just science fiction anymore. Advanced systems now interpret nuances in emotion, predict personal outcomes, and even mimic human reasoning in unsettling ways. That’s pushing us to rethink who’s accountable and where the lines really fall.

Let’s walk through these breakthroughs, the murky ethics they stir, and why it matters now more than ever.

Unsettling Capabilities: What Just Changed

AI today is doing things we’d only imagined a few years back. It’s analyzing voices to guess moods. It’s finding hidden patterns that are then used to predict criminal behavior or mental health risks. On top of that, AI can craft text, art, or decisions that feel deeply human.

This isn’t hype. A growing number of tools make these leaps possible. They have no emotions or understanding, but they sure act as if they do. And that’s part of the problem: it blurs the boundary between tool and confidant.

Real-World Snippets

- Law enforcement agencies are experimenting with predictive policing. Systems highlight neighborhoods or groups at higher risk for crime.

- Mental health platforms use AI to flag suicidal thoughts in users’ messages.

In both examples, the tech can do good—or cause unseen harm. And that’s where ethics begin to loom large.

Ethical Risks Unpacked

Bias and Misjudgment

AI’s decisions are built on data—past data. If the past is biased, the future stays biased. Predictive systems might unfairly target marginalized communities or misidentify intent based on skewed inputs.

Privacy vs. Benefit

Reading someone’s stress level or emotional pulse has value. It can help mental health professionals intervene early. But it also feels invasive. Where do we draw the line between helpful and creepy?

Accountability in a Fog

When an AI “knows” more about you than you do—where does responsibility lie? If a system flags someone as “risky” and that leads to real harm, who’s to blame: the developer, the user, the system itself?

“When tech starts predicting behavior, we edge closer to fate, not free choice,” warns a leading ethicist. That sums up the uneasy core of this moment.

Big Picture: Why It Matters

These breakthroughs aren’t abstract. They’re real, and they’re spreading fast. Businesses use them for hiring. Lawmakers discuss them in legislation. Healthcare explores them for diagnoses.

Putting them in place without reflection means mistakes get baked in. Societies risk losing trust. And people risk losing agency.

Practical Approaches: What Can Be Done

Transparent Design

Tools should come with clear explanations—what they do, how they do it, and their limits. A user should understand why a result surfaced.

Independent Audits

Third-party reviews that test for bias or unfair outcomes should be standard. They help catch blind spots developers might miss.
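
One simple check an audit might start with is comparing how often a tool selects people from different groups. The sketch below is only an illustration: the function names, the group labels, and the sample numbers are all made up, and the 0.8 cutoff borrows the "four-fifths" rule of thumb from US employment guidance rather than anything a specific auditor is known to use.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes for each group.

    `records` is an iterable of (group, selected) pairs, where
    `selected` is True when the tool picked that person.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Divide the lowest group selection rate by the highest one."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical audit sample: 100 applicants per group (invented numbers).
sample = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)

ratio = disparate_impact_ratio(sample)
flagged = ratio < 0.8  # illustrative threshold, not a legal test
print(f"ratio={ratio:.2f}, flagged={flagged}")
```

A low ratio does not prove a tool is unfair, but it tells reviewers where to look first.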

Inclusive Development

Building diverse teams reduces blind spots in design. Lived experience adds a lens many data scientists simply don’t have.

Oversight and Regulation

Policy can’t chase tech; it needs to guide it. Laws should focus on high-risk uses—like criminal justice, healthcare, and hiring. Meanwhile, industry can set voluntary guardrails ahead of regulation.

Case Study: A Hiring Tool Gone Wrong

A major tech firm once used AI to screen job applications. The system learned from past hires—and those had skewed heavily toward one gender. As a result, it started grading resumes in ways that favored that same gender profile.

Red flags went unnoticed until concerns were raised about unfairness. The company pulled back, recalibrated the model, and added human checks.

That episode shows how easy it is to build bias into AI, and how harm goes unchecked when teams don’t flag risks early.

Building Trust in the Age of Sentient-Looking AI

People accept tools when they trust the process. Here’s what builds trust:

- Clear communication: Let people know what the AI does—not just the output, but the reasoning.

- Oversight loops: Human review keeps the system from running unchecked.

- Contextual limits: Some uses shouldn’t be automated. Sensitive decisions—like mental health diagnosis—need actual humans.

Trust isn’t automatic. It’s earned step by step.
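
The oversight loop and contextual limits described above can be sketched as a small routing rule. Everything here is hypothetical: the `Prediction` fields, the context names, and the 0.9 confidence threshold are assumptions chosen for illustration, not a description of how any real product works.

```python
from dataclasses import dataclass

# Contexts where a person always makes the final call (assumed list,
# based on the high-risk areas named in this article).
SENSITIVE_CONTEXTS = {"mental_health", "criminal_justice", "hiring"}

# Hypothetical cutoff below which the system defers to a human.
CONFIDENCE_THRESHOLD = 0.9


@dataclass
class Prediction:
    context: str       # where the prediction will be used
    label: str         # what the system concluded
    confidence: float  # the system's own score, between 0 and 1


def route(prediction: Prediction) -> str:
    """Decide whether a prediction can be acted on automatically."""
    if prediction.context in SENSITIVE_CONTEXTS:
        return "human_review"  # contextual limit: never automated
    if prediction.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"  # oversight loop: uncertain results get checked
    return "automated"


print(route(Prediction("mental_health", "at_risk", 0.99)))  # human_review
print(route(Prediction("spam_filter", "spam", 0.95)))       # automated
```

The point of the design is that sensitivity is checked before confidence, so a very sure system still cannot act alone in a high-stakes setting.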

Conclusion

AI’s newest moves push technical boundaries, sure. But more than that, they cross ethical fault lines we can’t ignore. Bias, privacy, accountability—these aren’t theoretical anymore. They’re at stake in choices made today. Meeting the moment means designing with intention, oversight, and inclusion. That’s how trust grows—and how society keeps control of tools meant to serve it.

FAQs

What breakthroughs are pushing AI into new ethical territory?

Reading emotions, predicting behavior, and mimicking human reasoning all push AI into areas we once thought off-limits. These capabilities make ethical reflection urgent.

Why is AI decision accountability so hard?

Because AI lacks consciousness, it acts on patterns, not intention. If something goes wrong, tracing who’s responsible (developer, user, or system) gets complex.

Can regulation keep up with AI’s pace?

Regulation often trails tech. That’s why a mix of oversight, voluntary standards, and early audits is crucial. Thoughtful laws targeting high-risk areas help too.

How can bias sneak into AI tools?

Bias enters through skewed training data. A hiring system trained on one kind of candidate might inadvertently favor them again, perpetuating inequality.

What builds public trust in advanced AI?

Transparency, human review, and clear communication help. Showing how decisions are made—and letting people contest them—makes AI feel less like a black box.

Should we limit AI use in sensitive fields?

In areas like mental health, criminal justice, or hiring, human judgment remains essential. AI can assist, but shouldn’t be trusted alone.
