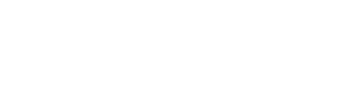

Here’s the quick scoop: open source large language model (LLM) news is buzzing with new releases, community-driven improvements, and growing ecosystem support. We’re seeing fresh model launches, fine‑tuning tools gaining traction, and surprising shifts in how people build on top of open models.

Recent Model Releases and Upgrades

Open source LLM efforts are advancing quickly. Several groups have pushed new versions or improved existing models, often focusing on safety filters and multilingual abilities. A few highlights:

- New model drops: Not long ago, a few community‑led models emerged with modest but meaningful gains on benchmarks. Some projects report better handling of domain‑specific queries.

- Upgrades to existing bases: Maintainers of popular models are patching in updated tokenizers, fine‑tuning pipelines, and trimmed-down variants for edge devices.

- Improved fine‑tuning tools: Ease-of-use matters. There’s traction behind UI kits and scripts that let non‑experts adapt models without deep technical know‑how.

These aren’t flashy headlines, but the trend is clear: consistent, grassroots development pushing incremental gains and usability improvements.

Ecosystem Growth: Tools, Datasets, and Community

Beyond models themselves, the ecosystem is expanding:

- Datasets: A few new open datasets surfaced, especially to help align outputs better and reduce biased or unsafe responses. Some are small-scale but high quality, focused on niche applications.

- Tooling: Tools like web‑based fine‑tuners, parameter shufflers, and evaluation dashboards are getting better. They lower entry barriers for developers and businesses.

- Community: Forums and Discord groups continue growing. There’s more sharing of fine‑tuning experiments, prompt engineering tips, and best practices.

Together, these parts form a richer, more diverse environment for open source LLM development than ever before.

Real‑World Examples and Use Cases

You’ve probably seen startups and hobbyists doing neat things:

- A small team built a Q&A assistant for legal documents using an open LLM fine‑tuned on public court filings.

- Another group is running on-device inference of a tiny open model for language translation in offline settings.

- An open source project is working on a creative writing assistant, blending human‑curated prompts with community suggestions.

These examples show how open LLMs are bridging into tangible applications—often where proprietary models aren’t affordable or customizable enough.

Expert Insight

“Open source LLMs are maturing through community efforts rather than flashy announcements. The steady improvements in usability and alignment are quietly powerful.”

The quote makes a useful point: the real value isn’t always in hype, but in developer effort and tooling maturity.

Why It Matters Now

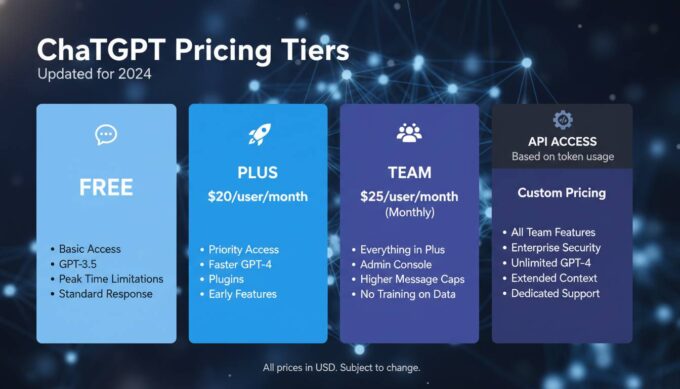

It’s tempting to think only big AI companies make progress. But open source LLMs matter because they:

- Enable experimentation without massive budgets.

- Support transparency and control over model usage.

- Foster innovation in less-served domains—education, local languages, niche applications.

Plus, as tooling improves, more people can responsibly build and deploy these models.

What to Watch Next

If you follow this space, look out for:

- More polished fine-tuning frameworks, e.g., drag-and-drop UIs or zero-code integrations.

- Broader datasets—especially multilingual and safety-oriented corpora.

- Better benchmarking norms—so people can easily compare open models on fairness, latency, and accuracy.

- Growing adoption in small businesses—e.g., niche chatbots, on-device assistants, or domain-specific summarizers.
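To make the benchmarking point concrete, here is a minimal Python sketch of what a shared comparison harness might look like. Everything in it is an assumption for illustration: `model_a`, `model_b`, `benchmark`, and `CASES` are hypothetical names, the "models" are plain functions standing in for real inference calls, and exact-match scoring is only one of many possible accuracy metrics. Fairness is not covered here, since it needs purpose-built test sets.

```python
import time
from statistics import mean
from typing import Callable, Dict, List, Tuple


# Hypothetical stand-ins for real models: each maps a prompt to an answer.
def model_a(prompt: str) -> str:
    answers = {"2+2=": "4", "Capital of France?": "Paris"}
    return answers.get(prompt, "unknown")


def model_b(prompt: str) -> str:
    return "4"  # always answers "4", so it is right only by accident


def benchmark(model: Callable[[str], str],
              cases: List[Tuple[str, str]]) -> Dict[str, float]:
    """Return exact-match accuracy and mean latency (seconds) over the cases."""
    correct = 0
    latencies = []
    for prompt, expected in cases:
        start = time.perf_counter()
        output = model(prompt)
        latencies.append(time.perf_counter() - start)
        # Case-insensitive exact match; real suites often use softer metrics.
        if output.strip().lower() == expected.strip().lower():
            correct += 1
    return {"accuracy": correct / len(cases),
            "mean_latency_s": mean(latencies)}


# Illustrative test cases (prompt, expected answer); not a real benchmark.
CASES = [("2+2=", "4"), ("Capital of France?", "Paris")]

if __name__ == "__main__":
    for name, model in [("model_a", model_a), ("model_b", model_b)]:
        print(name, benchmark(model, CASES))
```

Running every model through the same function and the same cases is the design choice that matters: the numbers are only comparable when the prompts, scoring rule, and timing method are identical.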

Conclusion

Open source LLM news isn’t always flashy, but right now it’s exciting in a steady, tangible way. We’re seeing new models, improved tools, and practical use cases emerging. What’s changing isn’t the headlines—it’s the groundwork.

FAQs

What’s driving the latest open source LLM progress?

Improvements in community tools, more accessible fine‑tuning pipelines, and datasets aiming at alignment and multilingual support are fueling the momentum.

Are these open models competitive with big‑tech LLMs?

Not always in raw power—but they excel in flexibility, transparency, and niche relevance, making them strong contenders for specific projects.

How can someone get started with open LLMs today?

Try exploring repositories that offer model weights plus fine‑tuning scripts. Look for communities that share templates or maintain low-code tools.

What pitfalls should users watch for?

Watch out for misalignment, hallucinations, or bias. Without robust safety filtering or evaluation benchmarks, open models can misbehave—so test carefully.
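One lightweight way to "test carefully" is a small suite of prompts with expectations attached. The Python sketch below is an assumption-laden illustration, not an established tool: `Check`, `run_checks`, `toy_model`, and `CHECKS` are hypothetical names, `toy_model` stands in for a real model call, and substring matching is a crude proxy that will miss many failures.

```python
from typing import Callable, List, NamedTuple


class Check(NamedTuple):
    prompt: str
    must_contain: List[str]      # substrings a good answer should include
    must_not_contain: List[str]  # substrings that signal a bad answer


def run_checks(model: Callable[[str], str], checks: List[Check]) -> List[str]:
    """Return a list of human-readable failure messages (empty if all pass)."""
    failures = []
    for check in checks:
        output = model(check.prompt).lower()
        for needle in check.must_contain:
            if needle.lower() not in output:
                failures.append(f"{check.prompt!r}: missing {needle!r}")
        for needle in check.must_not_contain:
            if needle.lower() in output:
                failures.append(f"{check.prompt!r}: contains {needle!r}")
    return failures


# Hypothetical model stub with one deliberately wrong answer baked in.
def toy_model(prompt: str) -> str:
    if "capital of australia" in prompt.lower():
        return "The capital of Australia is Sydney."  # deliberate hallucination
    return "I am not sure."


CHECKS = [
    Check("What is the capital of Australia?", ["Canberra"], ["Sydney"]),
    # An unanswerable question: the model should admit uncertainty.
    Check("Who won the 2090 World Cup?", ["not sure"], []),
]

if __name__ == "__main__":
    for failure in run_checks(toy_model, CHECKS):
        print("FAIL", failure)
```

A suite like this is best treated as a regression guard to rerun after each fine-tune, with human review still needed for anything the substring rules cannot express.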
